Catalogue of technical dimensions

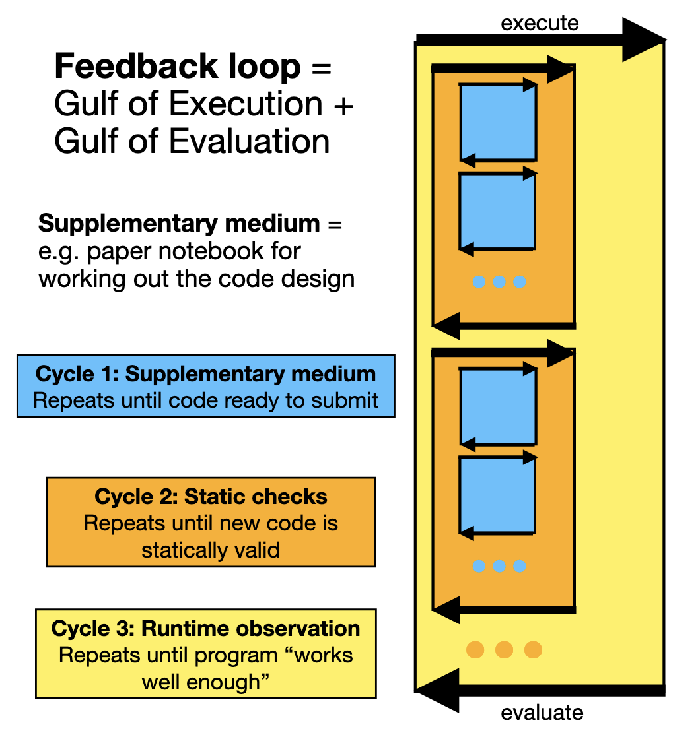

Technical dimensions break down discussion about programming systems along various specific "axes". The dimensions identify a range of possible design choices, characterized by two extreme points in the design space.

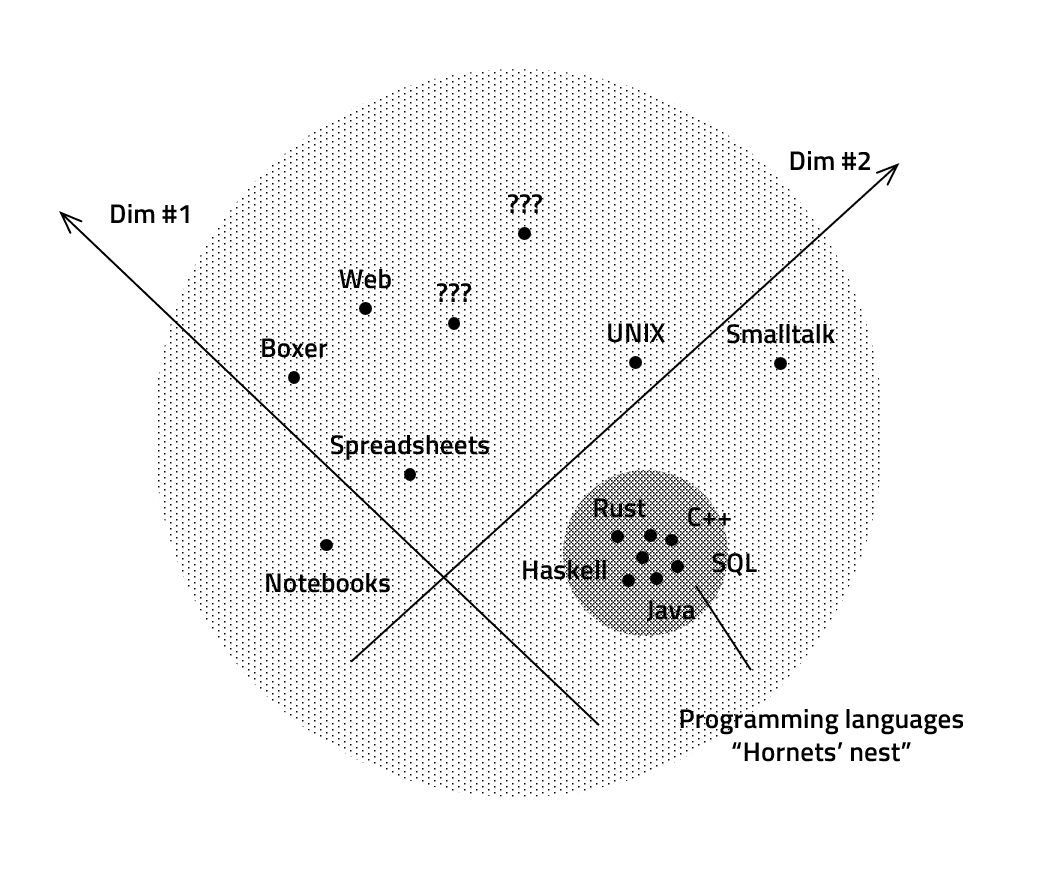

The dimensions are not quantitative, but they allow comparison. The extreme points do not represent "good" and "bad" designs, merely different trade-offs. The set of dimensions provides a map of the design space of programming systems (see diagram). Past and present systems serve as landmarks, but the map also reveals unexplored or overlooked possibilities.

The 23 technical dimensions are grouped into 7 clusters or topics of interest. Each cluster consists of individual dimensions, examples that capture a particular well-known value (or a combination of values), remarks and relations to other dimensions. We do not expect the catalogue to be exhaustive for all aspects of programming systems, past and future, and welcome follow-up work expanding the list.

Illustration of technical dimensions. The diagram shows a number of sample programming systems, positioned according to two hypothetical dimensions. Viewed as programming systems, text-based programming languages with debugger, editor and build tools are grouped in one region.

Technical dimensions of programming systems

Programming is done in a stateful environment, by interacting with a system through a graphical user interface. The stateful, interactive and graphical environment is more important than the programming language(s) used through it. Yet, most research focuses on comparing and studying programming languages and only little has been said about programming systems.

Technical dimensions is a framework that captures the characteristics of programming systems. It makes it possible to compare programming systems, better understand them, and to find interesting new points in the design space of programming systems. We created technical dimensions to help designers of programming systems to evaluate, compare and guide their work and, ultimately, stand on the shoulders of giants.

Where to start to learn more

-

Want to delve into the details and analyse your system?

Start from the catalogue of technical dimensions -

Want to explore our framework by example?

Start from good old programming systems -

Want to see how this helps us understand programming systems?

Start from a summary matrix of system and dimensions -

Read about our motivation, methodology and evaluation?

Start from our paper about technical dimensions

Related papers and documents

-

Read our ‹Programming› 2023 paper in a conventional format

Technical Dimensions of Programming Systems -

Check out tutorial slides with a structured summary of dimensions

Methodology of Programming Systems

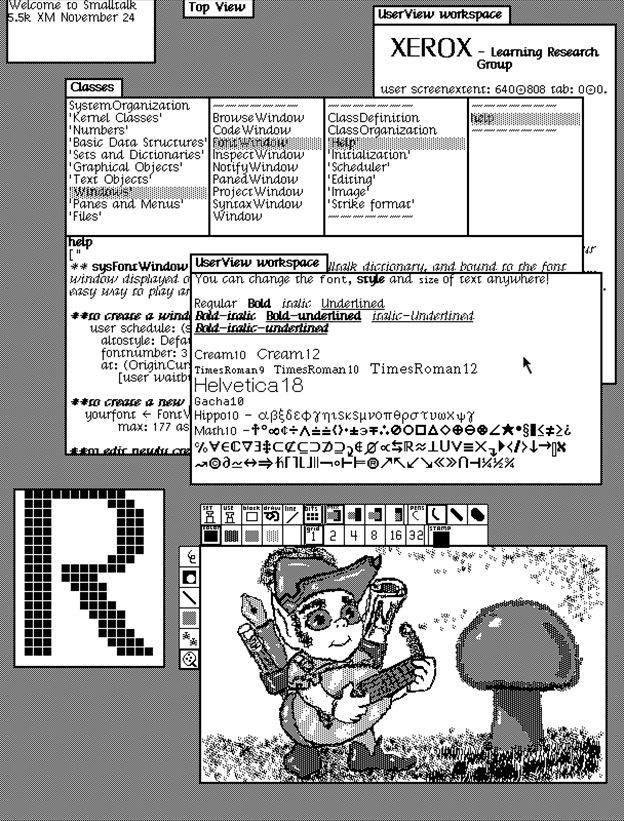

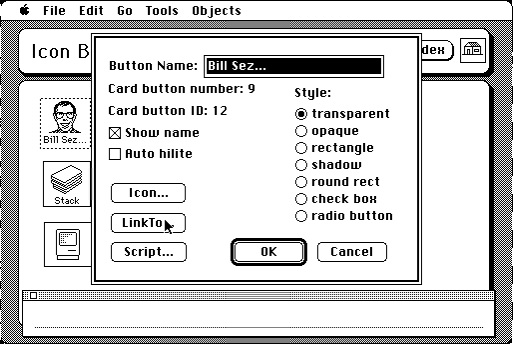

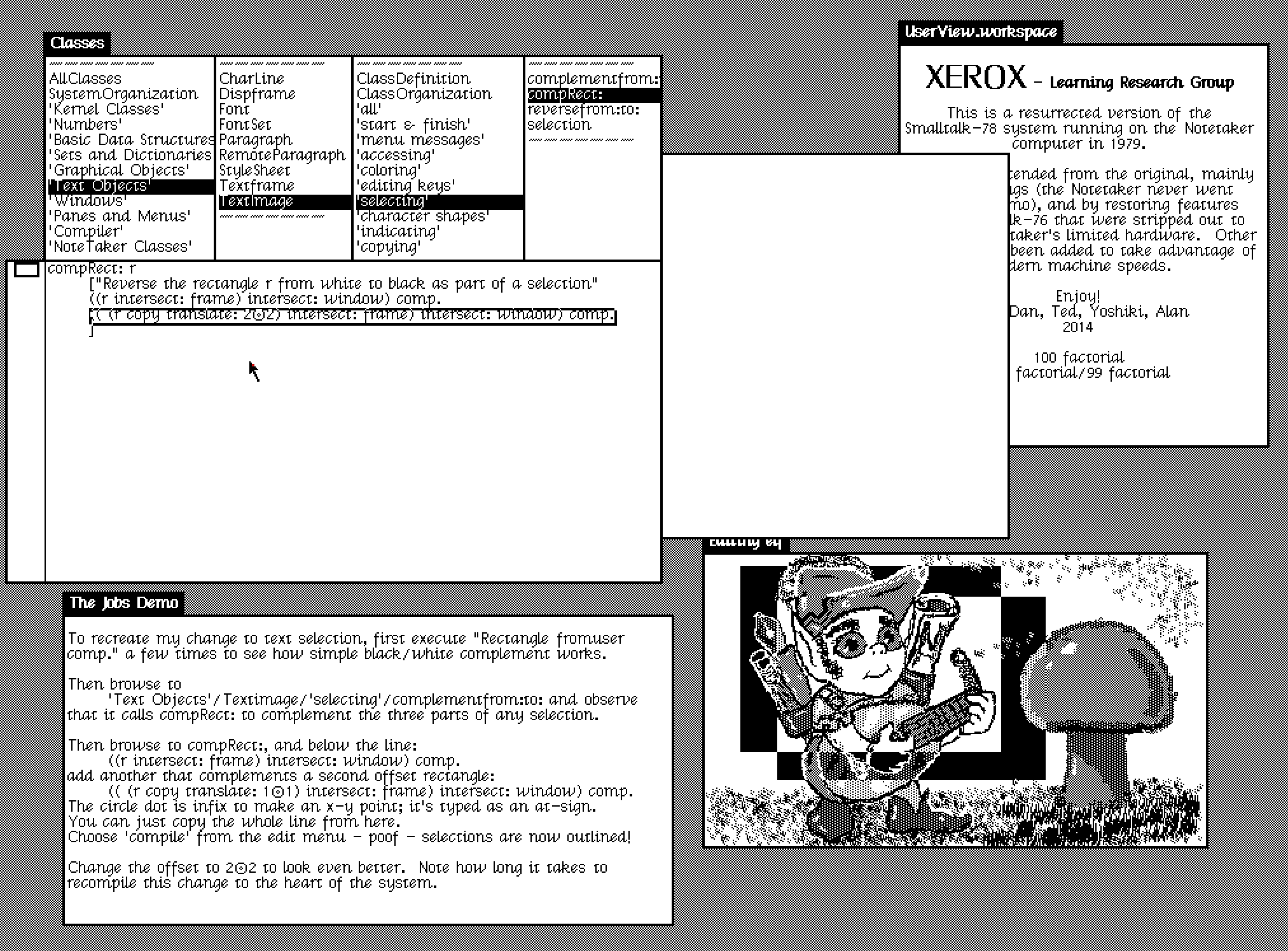

Smalltalk 76 programming environment. An example of a stateful programming environment with rich graphical user interface. In Smalltalk, the developer environment is a part of an executing program and the state of the program can be edited through object browser.

-

Want to delve into the details and analyse your system?

Start from the catalogue of technical dimensions -

Want to explore our framework by example?

Start from good old programming systems -

Want to see how this helps us understand programming systems?

Start from a summary matrix of system and dimensions -

Read about our motivation, methodology and evaluation?

Start from our paper about technical dimensions -

Want to the Technical dimensions welcome page?

Go back and choose a different route

Matrix of systems and dimensions

The matrix shows the differences between good old programming systems along the dimensions identified by our framework. For conciseness, the table shows only one row for each cluster of dimensions, which consists of multiple separate dimensions each.

The header colors are used to mark systems that are similar (in an informal sense) for a given dimension. Icons indicate a speficic characteristics and should help you find connections between systems. You can click on the header to go to a relevant paper section, but note that not all cases are discussed in the paper.

| LISP machines | Smalltalk | UNIX | Spreadsheets | Web platform | Hypercard | Boxer | Notebooks | Haskell | |

|---|---|---|---|---|---|---|---|---|---|

| Interaction | |||||||||

| Notation | |||||||||

| Conceptual structure | |||||||||

| Customizability | |||||||||

| Complexity | |||||||||

| Errors | |||||||||

| Adoptability |

Select systems and dimensions to compare in the table

Bibliography

- 1 Ankerson, Megan Sapnar. 2018. Dot-Com Design: The Rise of a Usable,Social, Commercial Web. NYU Press.

- 2 Basman, Antranig, Clayton Lewis, and Colin Clark. 2018. “The OpenAuthorial Principle: Supporting Networks of Authors in CreatingExternalisable Designs.” In Proceedings of the 2018 ACM SIGPLANInternational Symposium on New Ideas, New Paradigms, and Reflections onProgramming and Software, 29–43.

- 3 Basman, Antranig, L. Church, C. Klokmose, and Colin B. D. Clark. 2016.“Software and How It Lives on: Embedding Live Programs in the WorldAround Them.” In PPIG.

- 4 Basman, Antranig. 2016. “Building Software Is Not (yet) a Craft.” InProceedings of the 27th Annual Workshop of the Psychology ofProgramming Interest Group, PPIG 2016, Cambridge, UK, September 7-10,2016, edited by Luke Church, 32. Psychology of Programming InterestGroup. http://ppig.org/library/paper/building-software-not-yet-craft.

- 5 Basman, Antranig. 2021. “Infusion Framework and Components.” 2021.https://fluidproject.org/infusion.html.

- 6 Bloch, Joshua. 2007. “How to Design a Good API and Why It Matters.”2007.http://www.cs.bc.edu/~muller/teaching/cs102/s06/lib/pdf/api-design.

- 7 Borowski, Marcel, Luke Murray, Rolf Bagge, Janus Bager Kristensen,Arvind Satyanarayan, and Clemens Nylandsted Klokmose. 2022. “Varv:Reprogrammable Interactive Software as a Declarative Data Structure.” InCHI Conference on Human Factors in Computing Systems. CHI ’22. NewYork, NY, USA: Association for Computing Machinery.https://doi.org/10.1145/3491102.3502064.

- 8 Brooks, FP. 1995. “Aristocracy, Democracy and System Design.” In TheMythical Man Month: Essays on Software Engineering. Addison-Wesley.

- 9 Brooks, Frederick P. 1978. The Mythical Man-Month: Essays on Softw.1st ed. USA: Addison-Wesley Longman Publishing Co., Inc.

- 10 Chang, Hasok. 2004. Inventing Temperature: Measurement and ScientificProgress. Oxford: Oxford University Press.

- 11 Chang, Michael Alan, Bredan Tschaen, Theophilus Benson, and Laurent Vanbever. 2015a. “Chaos Monkey: Increasing SDN Reliability ThroughSystematic Network Destruction.” In Proceedings of the 2015 ACMConference on Special Interest Group on Data Communication, 371–72.SIGCOMM ’15. New York, NY, USA: Association for Computing Machinery.https://doi.org/10.1145/2785956.2790038.

- 12 Chang, Michael Alan, Bredan Tschaen, Theophilus Benson, and Laurent Vanbever. 2015b. “Chaos Monkey: Increasing SDN Reliability Through SystematicNetwork Destruction.” SIGCOMM Comput. Commun. Rev. 45 (4): 371–72.https://doi.org/10.1145/2829988.2790038.

- 13 Chisa, Ellen. 2020. “Introduction: Error Rail and Match with

DB::get.”2020. https://youtu.be/NRMmy9ZzA-o. - 14 Coda. 2022. “Coda: The Doc That Brings It All Together.”https://coda.io.

- 15 Czaplicki, Evan. 2018. 2018.https://www.youtube.com/watch?v=uGlzRt-FYto.

- 16 Edwards, Jonathan, Stephen Kell, Tomas Petricek, and Luke Church. 2019.“Evaluating Programming Systems Design.” In Proceedings of 30th AnnualWorkshop of Psychology of Programming Interest Group. PPIG 2019.Newcastle, UK.

- 17 Edwards, Jonathan. 2005a. “Subtext: Uncovering the Simplicity ofProgramming.” In Proceedings of the 20th Annual ACM SIGPLAN Conferenceon Object-Oriented Programming, Systems, Languages, and Applications,505–18. OOPSLA ’05. New York, NY, USA: Association for ComputingMachinery. https://doi.org/10.1145/1094811.1094851.

- 18 Edwards, Jonathan. 2005b. “Subtext: Uncovering the Simplicity of Programming.”SIGPLAN Not. 40 (10): 505–18.https://doi.org/10.1145/1103845.1094851.

- 19 Felleisen, Matthias, Robert Bruce Findler, Matthew Flatt, ShriramKrishnamurthi, Eli Barzilay, Jay McCarthy, and Sam Tobin-Hochstadt.2018. “A Programmable Programming Language.” Commun. ACM 61 (3):62–71. https://doi.org/10.1145/3127323.

- 20 Feyerabend, Paul. 2011. The Tyranny of Science, Cambridge: Polity Press. ISBN 0745651895

- 21 Floridi, Luciano, Nir Fresco, and Giuseppe Primiero. 2015. “OnMalfunctioning Software.” Synthese 192 (4): 1199–1220.

- 22 Foderaro, John. 1991. “LISP: Introduction.” Commun. ACM 34 (9): 27.https://doi.org/10.1145/114669.114670.

- 23 Fowler, Martin. 2010. Domain-Specific Languages. Pearson Education.

- 24 Fresco, Nir, and Giuseppe Primiero. 2013. “Miscomputation.” Philosophy& Technology 26 (3): 253–72.

- 25 Fry, Christopher. 1997. “Programming on an Already Full Brain.” Commun.ACM 40 (4): 55–64. https://doi.org/10.1145/248448.248459.

- 26 Fuller, Matthew et al. 2008. Software Studies: A Lexicon. Mit Press.

- 27 Gabriel, Richard P. 1991. “Worse Is Better.” 1991.https://www.dreamsongs.com/WorseIsBetter.html.

- 28 Gabriel, Richard P. 2008. “Designed as Designer.” In Proceedings of the 23rd ACMSIGPLAN Conference on Object-Oriented Programming Systems Languages andApplications, 617–32. OOPSLA ’08. New York, NY, USA: Association forComputing Machinery. https://doi.org/10.1145/1449764.1449813.

- 29 Gabriel, Richard P. 2012. “The Structure of a Programming Language Revolution.” InProceedings of the ACM International Symposium on New Ideas, NewParadigms, and Reflections on Programming and Software, 195–214.Onward! 2012. New York, NY, USA: Association for Computing Machinery.https://doi.org/10.1145/2384592.2384611.

- 30 Gamma, Erich, Richard Helm, Ralph E. Johnson, and John Vlissides. 1995.Design Patterns: Elements of Reusable Object-Oriented Software.Reading, Mass: Addison-Wesley.

- 31 Glide. 2022. “Glide: Create Apps and Websites Without Code.”https://www.glideapps.com.

- 32 Goodenough, John B. 1975. “Exception Handling: Issues and a ProposedNotation.” Commun. ACM 18 (12): 683–96.https://doi.org/10.1145/361227.361230.

- 33 Gosling, James, Bill Joy, Guy Steele, and Gilad Bracha. 2000. The JavaLanguage Specification. Addison-Wesley Professional.

- 34 Grad, Burton. 2007. “The Creation and the Demise of VisiCalc.” IEEEAnnals of the History of Computing 29 (3): 20–31.https://doi.org/10.1109/MAHC.2007.4338439.

- 35 Green, T. R. G., and M. Petre. 1996. “Usability Analysis of VisualProgramming Environments: A ‘Cognitive Dimensions’ Framework.” JOURNALOF VISUAL LANGUAGES AND COMPUTING 7: 131–74.

- 36 Gulwani, Sumit, William R Harris, and Rishabh Singh. 2012. “SpreadsheetData Manipulation Using Examples.” Communications of the ACM 55 (8):97–105.

- 37 Hancock, C., and M. Resnick. 2003. “Real-Time Programming and the BigIdeas of Computational Literacy.” PhD thesis, Massachusetts Institute ofTechnology. https://dspace.mit.edu/handle/1721.1/61549.

- 38 Hempel, Brian, Justin Lubin, and Ravi Chugh. 2019.“Sketch-n-Sketch: Output-DirectedProgramming for SVG.” In Proceedings of the 32nd Annual ACM Symposiumon User Interface Software and Technology, 281–92. UIST ’19. New York,NY, USA: Association for Computing Machinery.https://doi.org/10.1145/3332165.3347925.

- 39 Hempel, Brian, and Roly Perera. 2020. “LIVE Workshop.” 2020.https://liveprog.org/live-2020/.

- 40 Hempel, Brian, and Sam Lau. 2021. “LIVE Workshop.” 2021.https://liveprog.org/live-2021/.

- 41 Hirschfeld, Robert, Hidehiko Masuhara, and Kim Rose, eds. 2010.Workshop on Self-Sustaining Systems, S3 2010, Tokyo, Japan, September27-28, 2010. ACM. https://doi.org/10.1145/1942793.

- 42 Hirschfeld, Robert, and Kim Rose, eds. 2008. Self-Sustaining Systems,First Workshop, S3 2008, Potsdam, Germany, May 15-16, 2008, RevisedSelected Papers. Vol. 5146. Lecture Notes in Computer Science.Springer. https://doi.org/10.1007/978-3-540-89275-5.

- 43 Ingalls, Daniel. 1981. “Design Principles Behind Smalltalk.” 1981.https://archive.org/details/byte-magazine-1981-08/page/n299/mode/2up.

- 44 Kay, A., and A. Goldberg. 1977. “Personal Dynamic Media.” Computer 10(3): 31–41. https://doi.org/10.1109/C-M.1977.217672.

- 45 Kell, Stephen. 2009. “The Mythical Matched Modules: Overcoming theTyranny of Inflexible Software Construction.” In OOPSLA Companion.

- 46 Kell, Stephen. 2013. “The Operating System: Should There Be One?” In Proceedingsof the Seventh Workshop on Programming Languages and Operating Systems.PLOS ’13. New York, NY, USA: Association for Computing Machinery.https://doi.org/10.1145/2525528.2525534.

- 47 Kell, Stephen. 2017. “Some Were Meant for C: The Endurance of an UnmanageableLanguage.” In Proceedings of the 2017 ACM SIGPLAN InternationalSymposium on New Ideas, New Paradigms, and Reflections on Programmingand Software, 229–45. Onward! 2017. New York, NY, USA: Association forComputing Machinery. https://doi.org/10.1145/3133850.3133867.

- 48 Kiczales, Gregor, Erik Hilsdale, Jim Hugunin, Mik Kersten, Jeffrey Palm,and William G. Griswold. 2001. “An Overview of AspectJ.” In ECOOP2001 - Object-Oriented Programming, 15th European Conference, Budapest,Hungary, June 18-22, 2001, Proceedings, edited by Jørgen LindskovKnudsen, 2072:327–53. Lecture Notes in Computer Science. Springer.https://doi.org/10.1007/3-540-45337-7\\18.

- 49 Klein, Ursula. 2003. Experiments, Models, Paper Tools: Cultures ofOrganic Chemistry in the Nineteenth Century. Stanford, CA: StanfordUniversity Press. http://www.sup.org/books/title/?id=1917.

- 50 Klokmose, Clemens N., James R. Eagan, Siemen Baader, Wendy Mackay, andMichel Beaudouin-Lafon. 2015. “Webstrates: Shareable Dynamic Media.” InProceedings of the 28th Annual ACM Symposium on User Interface Software& Technology, 280–90. UIST ’15. New York, NY, USA: Association forComputing Machinery. https://doi.org/10.1145/2807442.2807446.

- 51 Kluyver, Thomas, Benjamin Ragan-Kelley, Fernando Pérez, Brian Granger,Matthias Bussonnier, Jonathan Frederic, Kyle Kelley, et al. n.d.“Jupyter Notebooks—a Publishing Format for Reproducible ComputationalWorkflows.” Positioning and Power in Academic Publishing: Players,Agents and Agendas, 87.

- 52 Knuth, Donald Ervin. 1984. “Literate Programming.” The ComputerJournal 27 (2): 97–111.

- 53 Kodosky, Jeffrey. 2020. “LabVIEW.” Proc. ACM Program. Lang. 4 (HOPL).https://doi.org/10.1145/3386328.

- 54 Kuhn, Thomas S. 1970. University of Chicago Press.

- 55 Kumar, Ranjitha, and Michael Nebeling. 2021. “UIST 2021 - Author Guide.”2021. https://uist.acm.org/uist2021/author-guide.html.

- 56 Lehman, Meir M. 1980. “Programs, Life Cycles, and Laws of SoftwareEvolution.” Proceedings of the IEEE 68 (9): 1060–76.

- 57 Levy, Steven. 1984. Hackers: Heroes of the Computer Revolution. USA:Doubleday.

- 58 Lieberman, H. 2001. Your Wish Is My Command:Programming by Example. Morgan Kaufmann.

- 59 Litt, Geoffrey, Daniel Jackson, Tyler Millis, and Jessica Quaye. 2020.“End-User Software Customization by Direct Manipulation of TabularData.” In Proceedings of the 2020 ACM SIGPLAN International Symposiumon New Ideas, New Paradigms, and Reflections on Programming andSoftware, 18–33. Onward! 2020. New York, NY, USA: Association forComputing Machinery. https://doi.org/10.1145/3426428.3426914.

- 60 Marlow, Simon, and Simon Peyton-Jones. 2012. The Glasgow HaskellCompiler. Edited by A. Brown and G. Wilson. The Architecture of OpenSource Applications. CreativeCommons. http://www.aosabook.org.

- 61 McCarthy, John. 1962. LISP 1.5 Programmer’s Manual. The MIT Press.

- 62 Meyerovich, Leo A., and Ariel S. Rabkin. 2012. “Socio-PLT: Principlesfor Programming Language Adoption.” In Proceedings of the ACMInternational Symposium on New Ideas, New Paradigms, and Reflections onProgramming and Software, 39–54. Onward! 2012. New York, NY, USA:Association for Computing Machinery.https://doi.org/10.1145/2384592.2384597.

- 63 Michel, Stephen L. 1989. Hypercard: The Complete Reference. Berkeley:Osborne McGraw-Hill.

- 64 Microsoft. 2022. “Language Server Protocol.”https://microsoft.github.io/language-server-protocol/.

- 65 Monperrus, Martin. 2017. “Principles of Antifragile Software.” InCompanion to the First International Conference on the Art, Science andEngineering of Programming. Programming ’17. New York, NY, USA:Association for Computing Machinery.https://doi.org/10.1145/3079368.3079412.

- 66 Nelson, T. H. 1965. “Complex Information Processing: A File Structurefor the Complex, the Changing and the Indeterminate.” In Proceedings ofthe 1965 20th National Conference, 84–100. ACM ’65. New York, NY, USA:Association for Computing Machinery.https://doi.org/10.1145/800197.806036.

- 67 Noble, James, and Robert Biddle. 2004. “Notes on Notes on PostmodernProgramming.” SIGPLAN Not. 39 (12): 40–56.https://doi.org/10.1145/1052883.1052890.

- 68 Norman, Donald A. 2002. The Design of Everyday Things. USA: BasicBooks, Inc.

- 69 Olsen, Dan R. 2007. “Evaluating User Interface Systems Research.” InProceedings of the 20th Annual ACM Symposium on User Interface Softwareand Technology, 251–58. UIST ’07. New York, NY, USA: Association forComputing Machinery. https://doi.org/10.1145/1294211.1294256.

- 70 Omar, Cyrus, Ian Voysey, Michael Hilton, Joshua Sunshine, Claire LeGoues, Jonathan Aldrich, and Matthew A. Hammer. 2017.“Toward Semantic Foundations for ProgramEditors.” In 2nd Summit on Advances in Programming Languages(SNAPL 2017), edited by Benjamin S. Lerner, Rastislav Bodík, andShriram Krishnamurthi, 71:11:1–12. Leibniz International Proceedings inInformatics (LIPIcs). Dagstuhl, Germany: SchlossDagstuhl–Leibniz-Zentrum fuer Informatik.https://doi.org/10.4230/LIPIcs.SNAPL.2017.11.

- 71 Parnas, David Lorge. 1985. “Software Aspects of Strategic DefenseSystems.” http://web.stanford.edu/class/cs99r/readings/parnas1.pdf.

- 72 Pawson, Richard. 2004. “Naked Objects.” PhD thesis, Trinity College,University of Dublin.

- 73 Perlis, A. J., and K. Samelson. 1958. “Preliminary Report: InternationalAlgebraic Language.” Commun. ACM 1 (12): 8–22.https://doi.org/10.1145/377924.594925.

- 74 Petit Emmanuel J. 2013. Irony: Irony or, the Self-Critical Opacity of Postmodern Architecture. Yale University Press

- 75 Petricek, Tomas, and Joel Jakubovic. 2021. “Complementary Science ofInteractive Programming Systems.” In History and Philosophy ofComputing.

- 76 Petricek, Tomas. 2017. “Miscomputation in Software: Learning to Livewith Errors.” Art Sci. Eng. Program. 1 (2): 14.https://doi.org/10.22152/programming-journal.org/2017/1/14.

- 77 Piumarta, Ian. 2006. “Accessible Language-Based Environments ofRecursive Theories.” 2006.http://www.vpri.org/pdf/rn2006001a_colaswp.pdf.

- 78 Raymond, Eric S., and Bob Young. 2001. The Cathedral & the Bazaar:Musings on Linux and Open Source by an Accidental Revolutionary.O’Reilly.

- 79 Reason, James. 1990. Human Error. Cambridge university press.

- 80 Ryder, Barbara G., Mary Lou Soffa, and Margaret Burnett. 2005. “TheImpact of Software Engineering Research on Modern ProgrammingLanguages.” ACM Trans. Softw. Eng. Methodol. 14 (4): 431–77.https://doi.org/10.1145/1101815.1101818.

- 81 Shneiderman. 1983. “Direct Manipulation: A Step Beyond ProgrammingLanguages.” Computer 16 (8): 57–69.https://doi.org/10.1109/MC.1983.1654471.

- 82 Sitaker, Kragen Javier. 2016. “The Memory Models That UnderlieProgramming Languages.” 2016.http://canonical.org/~kragen/memory-models/.

- 83 Smaragdakis, Yannis. 2019. “Next-Paradigm Programming Languages: WhatWill They Look Like and What Changes Will They Bring?” In Proceedingsof the 2019 ACM SIGPLAN International Symposium on New Ideas, NewParadigms, and Reflections on Programming and Software, 187–97. Onward!2019. New York, NY, USA: Association for Computing Machinery.https://doi.org/10.1145/3359591.3359739.

- 84 Smith, Brian Cantwell. 1982. “Procedural Reflection in ProgrammingLanguages.” PhD thesis, Massachusetts Institute of Technology.https://dspace.mit.edu/handle/1721.1/15961.

- 85 Smith, D. C. 1975. “Pygmalion: A Creative Programming Environment.” In.

- 86 Steele, G., and S. E. Fahlman. 1990. Common LISP: The Language. HPTechnologies. Elsevier Science.https://books.google.cz/books?id=FYoOIWuoXUIC.

- 87 Steele, Guy L., and Richard P. Gabriel. 1993. “The Evolution of Lisp.”In The Second ACM SIGPLAN Conference on History of ProgrammingLanguages, 231–70. HOPL-II. New York, NY, USA: Association forComputing Machinery. https://doi.org/10.1145/154766.155373.

- 88 Tanimoto, Steven L. 2013. “A Perspective on the Evolution of LiveProgramming.” In Proceedings of the 1st International Workshop on LiveProgramming, 31–34. LIVE ’13. San Francisco, California: IEEE Press.

- 89 Tchernavskij, Philip. 2019. “Designing and Programming MalleableSoftware.” PhD thesis, Université Paris-Saclay, École doctorale nº580Sciences et Technologies de l’Information et de la Communication (STIC).

- 90 Teitelman, Warren. 1966. “PILOT: A Step Toward Man-Computer Symbiosis.”PhD thesis, MIT.

- 91 Teitelman, Warren. 1974. “Interlisp Reference Manual.” Xerox PARC. http://www.bitsavers.org/pdf/xerox/interlisp/Interlisp_Reference_Manual_1974.pdf

- 92 Ungar, David, and Randall B. Smith. 2013. “The Thing on the Screen IsSupposed to Be the Actual Thing.” 2013.http://davidungar.net/Live2013/Live_2013.html.

- 93 Victor, Bret. 2012. “Learnable Programming.” 2012.http://worrydream.com/#!/LearnableProgramming.

- 94 Wall, Larry. 1999. “Perl, the First Postmodern Computer Language.” 1999.http://www.wall.org//~larry/pm.html.

- 95 Winestock, Rudolf. 2011. “The Lisp Curse.” 2011.http://www.winestockwebdesign.com/Essays/Lisp_Curse.html.

- 96 Wolfram, Stephen. 1991. Mathematica: A System for Doing Mathematics byComputer (2nd Ed.). USA: Addison Wesley Longman Publishing Co., Inc.

- 97 Zynda, Melissa Rodriguez. 2013. “The First Killer App: A History ofSpreadsheets.” Interactions 20 (5): 68–72.https://doi.org/10.1145/2509224.

- 98 desRivieres, J., and J. Wiegand. 2004. “Eclipse: A Platform forIntegrating Development Tools.” IBM Systems Journal 43 (2): 371–83.https://doi.org/10.1147/sj.432.0371.

- 99 diSessa, A. A, and H. Abelson. 1986. “Boxer: A ReconstructibleComputational Medium.” Commun. ACM 29 (9): 859–68.https://doi.org/10.1145/6592.6595.

- 100 diSessa, Andrea A. 1985. “A Principled Design for an IntegratedComputational Environment.” Human–Computer Interaction 1 (1): 1–47.https://doi.org/10.1207/s15327051hci0101\\1.

- 101 repl.it. 2022. “Replit: The Collaborative Browser-Based IDE.”https://replit.com.

- 102 team, Dark language. 2022. “Dark Lang.” https://darklang.com.

Technical dimensions of programming systems

Abstract

Programming requires much more than just writing code in a programming language. It is usually done in the context of a stateful environment, by interacting with a system through a graphical user interface. Yet, this wide space of possibilities lacks a common structure for navigation. Work on programming systems fails to form a coherent body of research, making it hard to improve on past work and advance the state of the art.

In computer science, much has been said and done to allow comparison of programming languages, yet no similar theory exists for programming systems; we believe that programming systems deserve a theory too. We present a framework of technical dimensions which capture the underlying characteristics of programming systems and provide a means for conceptualizing and comparing them.

We identify technical dimensions by examining past influential programming systems and reviewing their design principles, technical capabilities, and styles of user interaction. Technical dimensions capture characteristics that may be studied, compared and advanced independently. This makes it possible to talk about programming systems in a way that can be shared and constructively debated rather than relying solely on personal impressions.

Our framework is derived using a qualitative analysis of past programming systems. We outline two concrete ways of using our framework. First, we show how it can analyze a recently developed novel programming system. Then, we use it to identify an interesting unexplored point in the design space of programming systems.

Much research effort focuses on building programming systems that are easier to use, accessible to non-experts, moldable and/or powerful, but such efforts are disconnected. They are informal, guided by the personal vision of their authors and thus are only evaluable and comparable on the basis of individual experience using them. By providing foundations for more systematic research, we can help programming systems researchers to stand, at last, on the shoulders of giants.

Table of contents

Introduction

Related work

Programming systems

Evaluation

Conclusions

Appendices

A systematic presentation removes ideas from the ground that made them grow and arranges them in an artificial pattern.

Paul Feyerabend, The Tyranny of Science 20

Irony is said to allow the artist to continue his creative production while immersed in a sociocultural context of which he is critical.

Emmanuel Petit, Irony; or, the Self-Critical Opacity of Postmodernist Architecture 74

Introduction

Many forms of software have been developed to enable programming. The classic form consists of a programming language, a text editor to enter source code, and a compiler to turn it into an executable program. Instances of this form are differentiated by the syntax and semantics of the language, along with the implementation techniques in the compiler or runtime environment. Since the advent of graphical user interfaces (GUIs), programming languages can be found embedded within graphical environments that increasingly define how programmers work with the language—for instance, by directly supporting debugging or refactoring. Beyond this, the rise of GUIs also permits diverse visual forms of programming, including visual languages and GUI-based end-user programming tools.

This paper advocates a shift of attention from programming languages to the more general notion of "software that enables programming"---in other words, programming systems.

Definition: Programming system. A programming system is an integrated and complete set of tools sufficient for creating, modifying, and executing programs. These will include notations for structuring programs and data, facilities for running and debugging programs, and interfaces for performing all of these tasks. Facilities for testing, analysis, packaging, or version control may also be present. Notations include programming languages and interfaces include text editors, but are not limited to these.

This notion covers classic programming languages together with their editors, debuggers, compilers, and other tools. Yet it is intentionally broad enough to accommodate image-based programming environments like Smalltalk, operating systems like UNIX, and hypermedia authoring systems like Hypercard, in addition to various other examples we will mention.

The problem: no systematic framework for systems

There is a growing interest in broader forms of programming systems, both in the programming research community and in industry. Researchers are studying topics such as programming experience and live programming that require considering not just the language, but further aspects of a given system. At the same time, commercial companies are building new programming environments like Replit 101 or low-code tools like Dark 102 and Glide. 31 Yet, such topics remain at the sidelines of mainstream programming research. While programming languages are a well-established concept, analysed and compared in a common vocabulary, no similar foundation exists for the wider range of programming systems.

The academic research on programming suffers from this lack of common vocabulary. While we may thoroughly assess programming languages, as soon as we add interaction or graphics into the picture, evaluation beyond subjective "coolness" becomes fraught with difficulty. The same difficulty in the context of user interface systems has been analyzed by Olsen. 69 Moreover, when designing new systems, inspiration is often drawn from the same few standalone sources of ideas. These might be influential past systems like Smalltalk, programmable end-user applications like spreadsheets, or motivational illustrations like those of Bret Victor. 93

Instead of forming a solid body of work, the ideas that emerge are difficult to relate to each other. The research methods used to study programming systems lack the rigorous structure of programming language research methods. They tend to rely on singleton examples, which demonstrate their author's ideas, but are inadequate methods for comparing new ideas with the work of others. This makes it hard to build on top and thereby advance the state of the art.

Studying programming systems is not merely about taking a programming language and looking at the tools that surround it. It presents a paradigm shift to a perspective that is, at least partly, incommensurable with that of languages. When studying programming languages, everything that matters is in the program code; when studying programming systems, everything that matters is in the interaction between the programmer and the system. As documented by Gabriel, 29 looking at a system from a language perspective makes it impossible to think about concepts that arise from interaction with a system, but are not reflected in the language. Thus, we must proceed with some caution. As we will see, when we talk about Lisp as a programming system, we mean something very different from a parenthesis-heavy programming language!

Contributions

We propose a common language as an initial step towards a more progressive research on programming systems. Our set of technical dimensions seeks to break down the holistic view of systems along various specific "axes". The dimensions identify a range of possible design choices, characterized by two extreme points in the design space. They are not quantitative, but they allow comparison by locating systems on a common axis. We do not intend for the extreme points to represent "good" or "bad" designs; we expect any position to be a result of design trade-offs. At this early stage in the life of such a framework, we encourage agreement on descriptions of systems first in order to settle any normative judgements later.

The set of dimensions can be understood as a map of the design space of programming systems (see diagram). Past and present systems will serve as landmarks, and with enough of them, we may reveal unexplored or overlooked possibilities. So far, the field has not been able to establish a virtuous cycle of feedback; it is hard for practitioners to situate their work in the context of others' so that subsequent work can improve on it. Our aim is to provide foundations for the study of programming systems that would allow such development.

Main contributions

The main contributions of this project are organized as follows:

- In Programming systems, we characterize what a programming system is and review landmark programming systems of the past that are used as examples throughout this paper, as well as to delineate our notion of a programming system.

- The Catalogue of technical dimensions presents the dimensions in detail, organised into related clusters: interaction, notation, conceptual structure, customizability, complexity, errors, and adoptability. For each dimension, we give examples that illustrate the range of values along its axis.

- In Evaluation, we sketch two ways of using the technical dimensions framework. We first use it to evaluate a recent interesting programming system Dark and then use it to identify an unexplored point in the design space and envision a potential novel programming system.

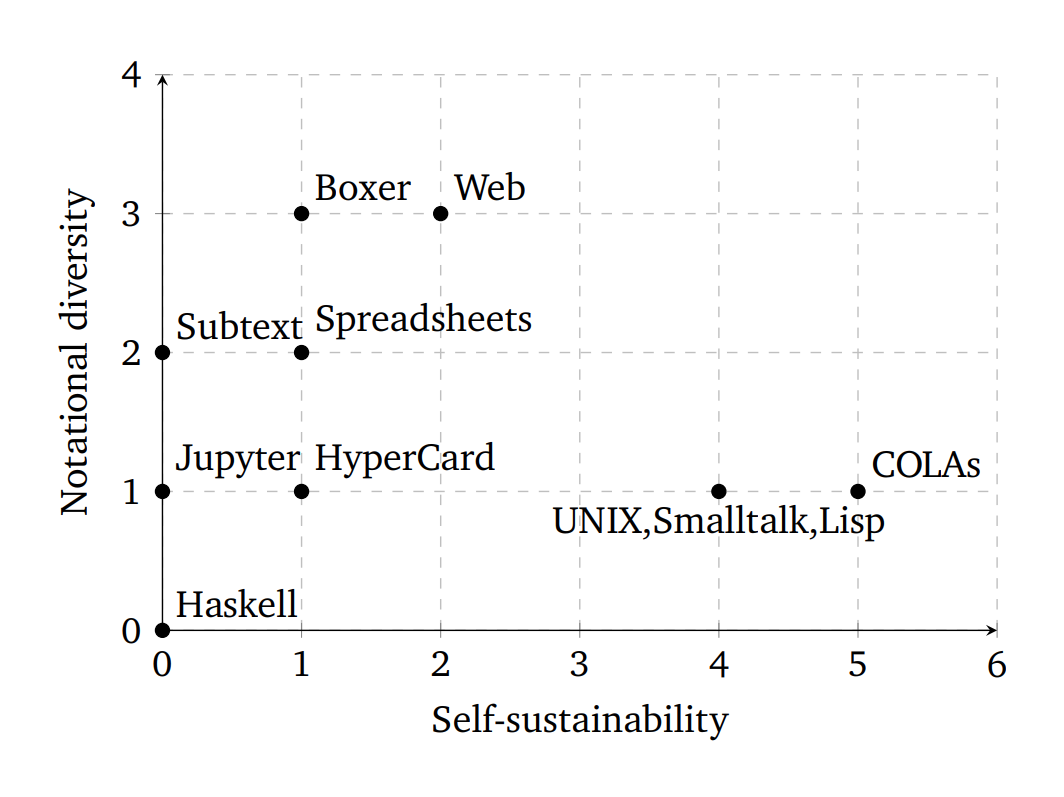

Programming systems positioned in a common two-dimensional space. One 2-dimensional slice of the space of possible systems, examined in more detail in Exploring the design space, showing a set of example programming systems (or system families) measured against self-sustainability and notational diversity, revealing an absence of systems with a high degree of both.

The numerical scores on each axis are generated systematically by a method described in Making dimensions quantitative. While these results are plausible, they are not definitive as the method could be developed a lot further in future work (see Remarks and future work).

Related work

While we do have new ideas to propose, part of our contribution is integrating a wide range of existing concepts under a common umbrella. This work is spread out across different domains, but each part connects to programming systems or focuses on a specific characteristic they may have.

From languages to systems

Our approach lies between a narrow focus on programming languages and a broad focus on programming as a socio-political and cultural subject. Our concept of a programming system is technical in scope, although we acknowledge the technical side often has important social implications as in the case of the Adoptability dimension. This contrasts with the more socio-political focus found in Tchernavskij 89 or in software studies 26. It overlaps with Kell's conceptualization of UNIX, Smalltalk, and Operating Systems generally, 46 and we have ensured that UNIX has a place in our framework.

The distinction between more narrow programming languages and broader programming systems is more subtle. Richard Gabriel noted an invisible paradigm shift from the study of "systems" to the study of "languages" in computer science, 29 and this observation informs our distinction here. One consequence of the change is that a language is often formally specified apart from any specific implementations, while systems resist formal specification and are often defined by an implementation. We recognize programming language implementations as a small region of the space of possible systems, at least as far as interaction and notations might go. Hence we refer to the interactive programming system aspects of languages, such as text editing and command-line workflow.

Programming systems research

There is renewed interest in programming systems in the form of non-traditional programming tools:

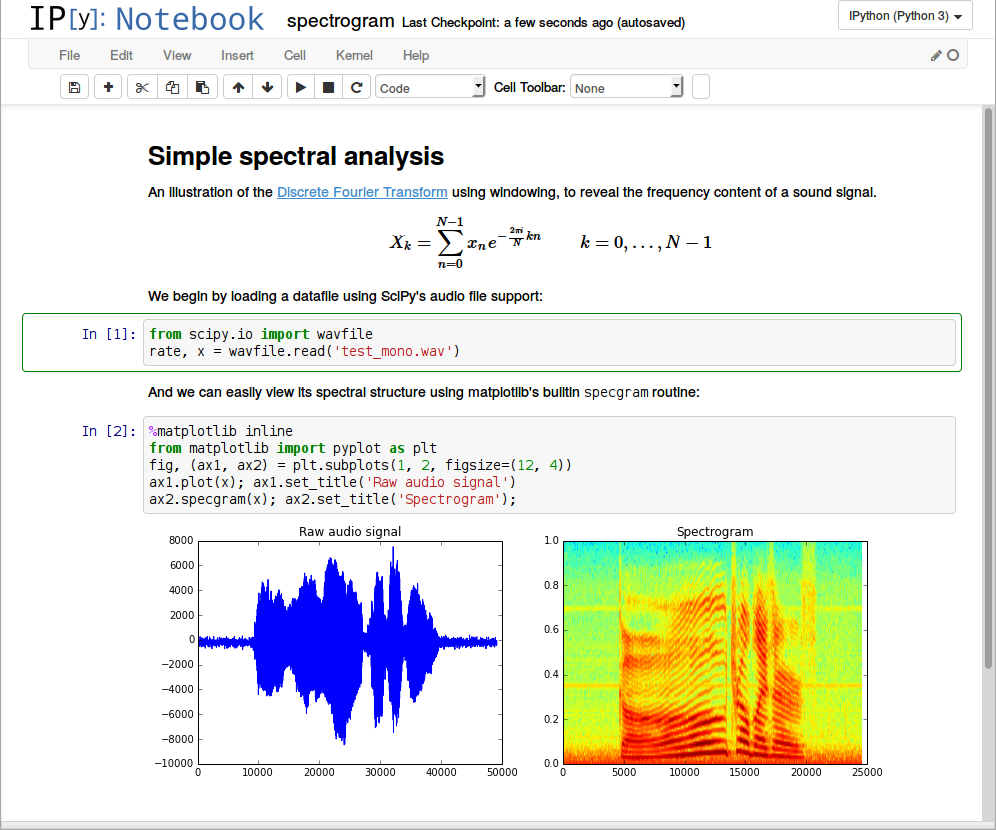

- Computational notebooks such as Jupyter 51 facilitate data analysis by combining code snippets with text and visual output. They are backed by stateful "kernels" and used interactively.

- "Low code" end-user programming systems allow application development (mostly) through a GUI. One example is Coda, 14 which combines tables, formulas, and scripts to enable non-technical people to build "applications as documents".

- Domain-specific programming systems such as Dark, 102 which claims a "holistic" programming experience for cloud API services. This includes a language, a direct manipulation editor, and near-instantaneous building and deployment.

- Even for general purpose programming with conventional tools, systems like Replit 101 have demonstrated the benefits of integrating all needed languages, tools, and user interfaces into a seamless experience, available from the browser, that requires no setup.

Research that follows the programming systems perspective can be found in a number of research venues. Those include Human-Computer Interaction conferences such as UIST (ACM Symposium on User Interface Software and Technology) and VL/HCC (IEEE Symposium on Visual Languages and Human-Centric Computing). However, work in those often emphasizes the user experience over technical description. Programming systems are often presented in workshops such as LIVE and PX (Programming eXperience). However, work in those venues is often limited to the authors' individual perspectives and suffers from the aforementioned difficulty of comparing to other systems.

Concrete examples of systems appear throughout the paper. Recent systems which motivated some of our dimensions include Subtext, 18 which combines code with its live execution in a single editable representation; Sketch-n-sketch, 38 which can synthesize code by direct manipulation of its outputs; Hazel, 70 a live functional programming environment with typed holes to enable execution of incomplete or ill-typed programs; and Webstrates, 50 which extends Web pages with real-time sharing of state.

Already-known characteristics

There are several existing projects identifying characteristics of programming systems. Some revolve around a single one, such as levels of liveness, 88 or plurality and communicativity. 47 Others propose an entire collection. Memory Models of Programming Languages 82 identifies the "everything is an X" metaphors underlying many programming languages; the Design Principles of Smalltalk 43 documents the philosophical goals and dicta used in the design of Smalltalk; the "Gang of Four" Design Patterns 30 catalogues specific implementation tactics; and the Cognitive Dimensions of Notation 35 introduces a common vocabulary for software's notational surface and for identifying their trade-offs.

The latter two directly influence our proposal. Firstly, the Cognitive Dimensions are a set of qualitative properties which can be used to analyze notations. We are extending this approach to the "rest" of a system, beyond its notation, with Technical Dimensions. Secondly, our individual dimensions naturally fall under larger clusters that we present in a regular format, similar to the presentation of the classic Design Patterns. As for characteristics identified by others, part of our contribution is to integrate them under a common umbrella: the existing concepts of liveness, pluralism, and uniformity metaphors ("everything is an X") become dimensions in our framework.

Methodology

We follow the attitude of Evaluating Programming Systems 16 in distinguishing our work from HCI methods and empirical evaluation. We are generally concerned with characteristics that are not obviously amenable to statistical analysis (e.g. mining software repositories) or experimental methods like controlled user studies, so numerical quantities are generally not featured.

Similar development seems to be taking place in HCI research focused on user interfaces. The UIST guidelines 55 instruct authors to evaluate system contributions holistically, and the community has developed heuristics for such evaluation, such as Evaluating User Interface Systems Research. 69 Our set of dimensions offers similar heuristics for identifying interesting aspects of programming systems, though they focus more on underlying technical properties than the surface interface.

Finally, we believe that the aforementioned paradigm shift from programming systems to programming languages has hidden many ideas about programming that are worthwhile recovering and developing further. 75 Thus our approach is related to the idea of complementary science developed by Chang 10 in the context of history and philosophy of science. Chang argues that even in disciplines like physics, superseded or falsified theories may still contain interesting ideas worth documenting. In the field of programming, where past systems are discarded for many reasons besides empirical failure, Chang's complementary science approach seems particularly suitable.

Programming systems deserve a theory too!

In short, while there is a theory for programming languages, programming systems deserve a theory too. It should apply from the small scale of language implementations to the vast scale of operating systems. It should be possible to analyse the common and unique features of different systems, to reveal new possibilities, and to build on past work in an effective manner. In Kuhnian terms, 54 it should enable a body of "normal science": filling in the map of the space of possible systems, thereby forming a knowledge repository for future designers.

Programming systems

We introduce the notion of a programming system through a number of example systems. We draw them from three broad reference classes:

Software ecosystems built around a text-based programming language. They consist of a set of tools such as compilers, debuggers, and profilers. These tools may exist as separate command-line programs, or within an Integrated Development Environment.

Those that resemble an operating system (OS) in that they structure the execution environment and encompass the resources of an entire machine (physical or virtual). They provide a common interface for communication, both between the user and the computer, and between programs themselves.

Programmable applications, typically optimized for a specific domain, offering a limited degree of programmability which may be increased with newer versions.

It must be noted that our selection of systems is not meant to be exhaustive; there will be many past and present systems that we are not aware of or do not know much about, and we obviously cannot cover programming systems that have not been created yet. With that caveat, we will proceed to detail some systems under the above grouping. This will provide an intuition for the notion of a programming system and establish a collection of go-to examples for the rest of the paper.

Systems based around languages

Text-based programming languages sit within programming systems whose boundaries are not explicitly defined. To speak of a programming system, we need to consider a language with, at minimum, an editor and a compiler or interpreter. However, the exact boundaries are a design choice that significantly affects our analysis.

Java with the Eclipse ecosystem

Java 33 cannot be viewed as a programming system on its own, but it is one if we consider it as embedded in an ecosystem of tools. There are multiple ways to delineate this, resulting in different analyses. A minimalistic programming system would consist of a text editor to write Java code and a command line compiler. A more realistic system is Java as embedded in the Eclipse IDE. 98 The programming systems view allows us to see all there is beyond the textual code. In the case of Eclipse, this includes the debugger, refactoring tools, testing and modelling tools, GUI designers, and so on. This delineation yields a programming system that is powerful and convenient, but has a large number of concepts and secondary notations.

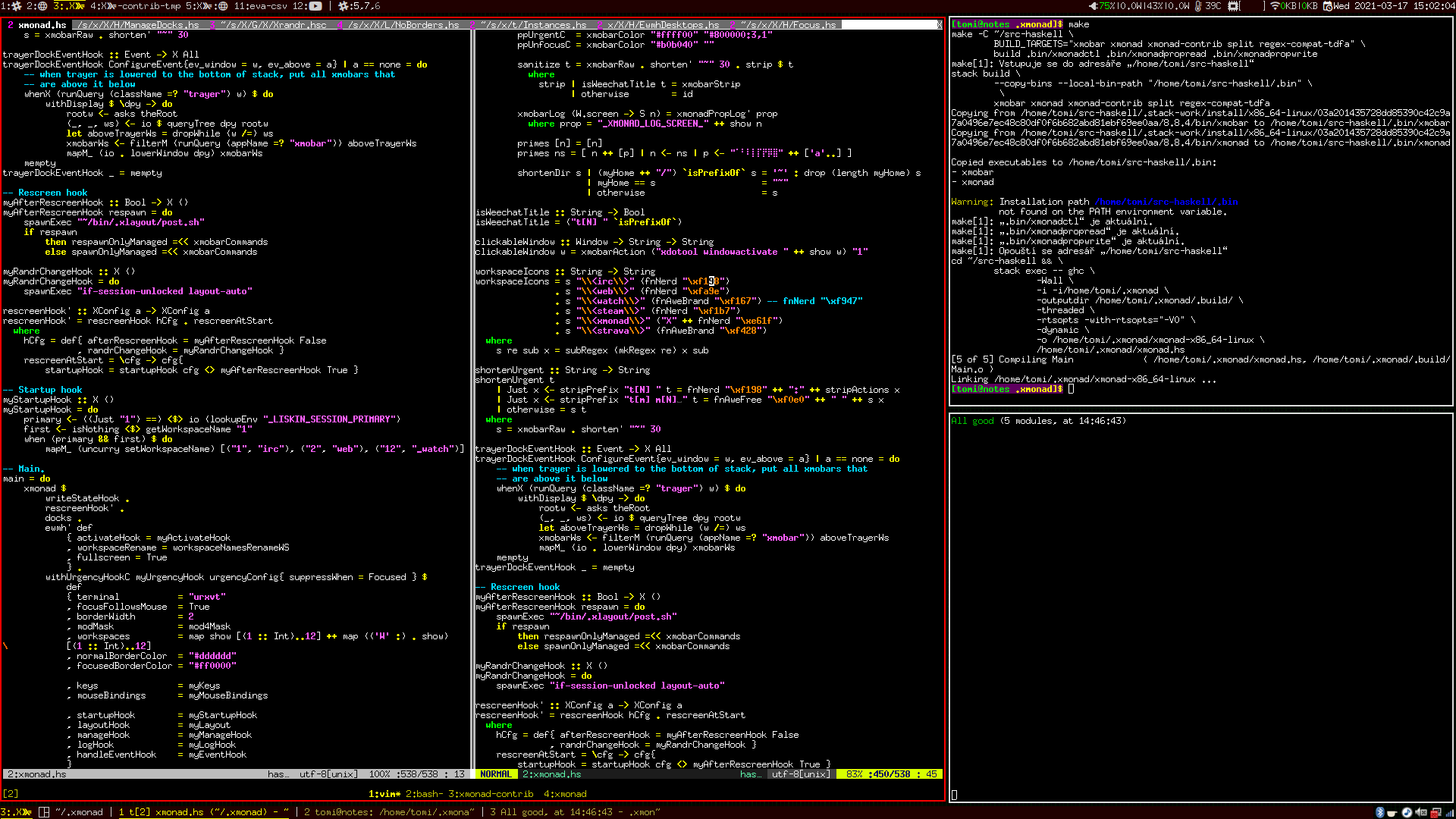

Haskell tools ecosystem

Haskell is an even more language-focused programming system. It is used through the command-line GHC compiler 60 and GHCi REPL, alongside a text editor that provides features like syntax highlighting and auto-completion. Any editor that supports the Language Server Protocol 64 will suffice to complete the programming system.

Haskell is mathematically rooted and relies on mathematical intuition for understanding many of its concepts. This background is also reflected in the notations it uses. In addition to the concrete language syntax for writing code, the ecosystem also uses an informal mathematical notation for writing about Haskell (e.g. in academic papers or on the whiteboard). This provides an additional tool for manipulating Haskell programs. Experiments on paper can provide a kind of rapid feedback that other systems may provide through live programming.

From REPLs to notebooks

A different kind of developer ecosystem that evolved around a programming language is the Jupyter notebook platform. 51 In Jupyter, data scientists write scripts divided into notebook cells, execute them interactively and see the resulting data and visualizations directly in the notebook itself. This brings together the REPL, which dates back to conversational implementations of Lisp in the 1960s, with literate programming 52 used in the late 1980s in Mathematica 1.0. 96

As a programming system, Jupyter has a number of interesting characteristics. The primary outcome of programming is the notebook itself, rather than a separate application to be compiled and run. The code lives in a document format, interleaved with other notations. Code is written in small parts that are executed quickly, offering the user more rapid feedback than in conventional programming. A notebook can be seen as a trace of how the result has been obtained, yet one often problematic feature of notebooks is that some allow the user to run code blocks out-of-order. The code manipulates mutable state that exists in a "kernel" running in the background. Thus, retracing one's steps in a notebook is more subtle than in, say, Common Lisp, 86 where the dribble function would directly record the user's session to a file.

OS-like programming systems

"OS-likes" begin from the 1960s when it became possible to interact one-on-one with a computer. First, time-sharing systems enabled interactive shared use of a computer via a teletype; smaller computers such as the PDP-1 and PDP-8 provided similar direct interaction, while 1970s workstations such as the Alto and Lisp Machines added graphical displays and mouse input. These OS-like systems stand out as having the totalising scope of operating systems, whether or not they are ordinarily seen as taking this role.

MacLisp and Interlisp

LISP 1.5 61 arrived before the rise of interactive computers, but the existence of an interpreter and the absence of declarations made it natural to use Lisp interactively, with the first such implementations appearing in the early 1960s. Two branches of the Lisp family, 87 MacLisp and the later Interlisp, embraced the interactive "conversational" way of working, first through a teletype and later using the screen and keyboard.

Both MacLisp and Interlisp adopted the idea of persistent address space. Both program code and program state were preserved when powering off the system, and could be accessed and modified interactively as well as programmatically using the same means. Lisp Machines embraced the idea that the machine runs continually and saves the state to disk when needed. Today, this is widely seen in cloud-based services like Google Docs and online IDEs. Another idea pioneered in MacLisp and Interlisp was the use of structure editors. These let programmers work with Lisp data structures not as sequences of characters, but as nested lists. In Interlisp, the programmer would use commands such as *P to print the current expression, or *(2 (X Y)) to replace its second element with the argument (X Y). The PILOT system 90 offered even more sophisticated conversational features. For typographical errors and other slips, it would offer an automatic fix for the user to interactively accept, modifying the program in memory and resuming execution.

Smalltalk

Smalltalk appeared in the 1970s with a distinct ambition of providing "dynamic media which can be used by human beings of all ages". 44 The authors saw computers as meta-media that could become a range of other media for education, discourse, creative arts, simulation and other applications not yet invented. Smalltalk was designed for single-user workstations with a graphical display, and pioneered this display not just for applications but also for programming itself. In Smalltalk 72, one wrote code in the bottom half of the screen using a structure editor controlled by a mouse, and menus to edit definitions. In Smalltalk-76 and later, this had switched to text editing embedded in a class browser for navigating through classes and their methods.

Similarly to Lisp systems, Smalltalk adopts the persistent address space model of programming where all objects remain in memory, but based on objects and message passing rather than lists. Any changes made to the system state by programming or execution are preserved when the computer is turned off. Lastly, the fact that much of the Smalltalk environment is implemented in itself makes it possible to extensively modify the system from within.

We include Lisp and Smalltalk in the OS-likes because they function as operating systems in many ways. On specialized machines, like the Xerox Alto and Lisp machines, the user started their machine directly in the Lisp or Smalltalk environment and was able to do everything they needed from within the system. Nowadays, however, this experience is associated with UNIX and its descendants on a vast range of commodity machines.

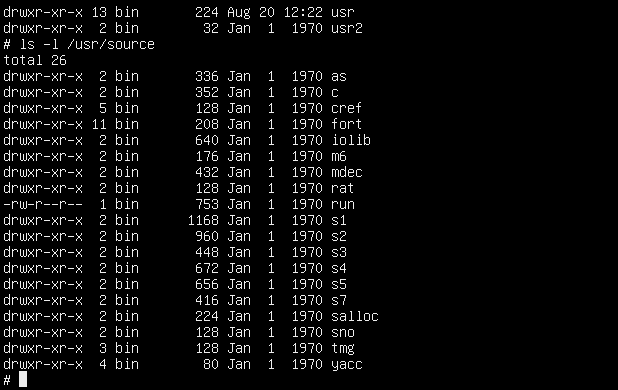

UNIX

UNIX illustrates the fact that many aspects of programming systems are shaped by their intended target audience. Built for computer hackers, 57 its abstractions and interface are close to the machine. Although historically linked to the C language, UNIX developed a language-agnostic set of abstractions that make it possible to use multiple programming languages in a single system. While everything is an object in Smalltalk, the ontology of the UNIX system consists of files, memory, executable programs, and running processes. Note the explicit "stage" distinction here: UNIX distinguishes between volatile memory structures, which are lost when the system is shut down, and non-volatile disk structures that are preserved. This distinction between types of memory is considered, by Lisp and Smalltalk, to be an implementation detail to be abstracted over by their persistent address space. Still, this did not prevent the UNIX ontology from supporting a pluralistic ecosystem of different languages and tools.

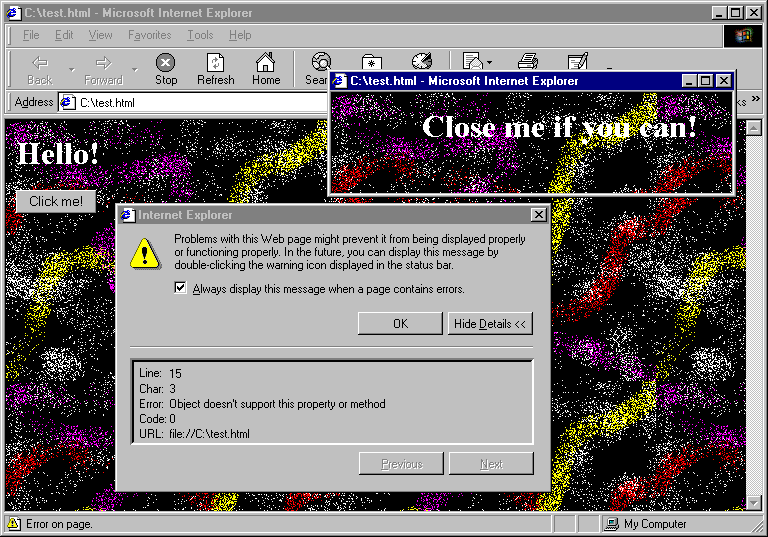

Early and modern Web

The Web evolved 1 from a system for sharing and organizing information to a programming system. Today, it consists of a wide range of server-side programming tools, JavaScript and languages that compile to it, and notations like HTML and CSS. As a programming system, the "modern 2020s web" is reasonably distinct from the "early 1990s web". In the early web, JavaScript code was distributed in a form that made it easy to copy and re-use existing scripts, which led to enthusiastic adoption by non-experts—recalling the birth of microcomputers like Commodore 64 with BASIC a decade earlier.

In the "modern web", multiple programming languages treat JavaScript as a compilation target, and JavaScript is also used as a language on the server-side. This web is no longer simple enough to encourage copy-and-paste remixing of code from different sites. However, it does come with advanced developer tools that provide functionality resembling early interactive programming systems like Lisp and Smalltalk. The Document Object Model (DOM) structure created by a web page is transparent, accessible to the user and modifiable through the built-in browser inspector tools. Third-party code to modify the DOM can be injected via extensions. The DOM almost resembles the tree/graph model of Smalltalk and Lisp images, lacking the key persistence property. This limitation, however, is being addressed by Webstrates. 50

Application-focused systems

The previously discussed programming systems were either universal, not focusing on any particular kind of application, or targeted at broad fields, such as Artificial Intelligence and symbolic data manipulation in Lisp's case. In contrast, the following examples focus on more narrow kinds of applications that need to be built. Many support programming based on rich interactions with specialized visual and textual notations.

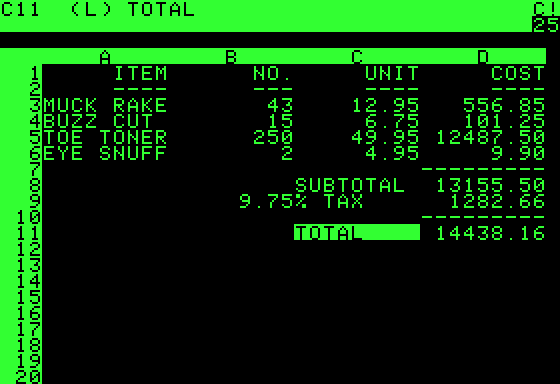

Spreadsheets

The first spreadsheets became available in 1979 in VisiCalc 34 97 and helped analysts perform budget calculations. As programming systems, spreadsheets are notable for their programming substrate (a two-dimensional grid) and evaluation model (automatic re-evaluation). The programmability of spreadsheets developed over time, acquiring features that made them into powerful programming systems in a way VisiCalc was not. The final step was the 1993 inclusion of macros in Excel, later further extended with Visual Basic for Applications.

Graphical "languages"

Efforts to support programming without relying on textual code can only be called "languages" in a metaphorical sense. In these programming systems, programs are made out of graphical structures as in LabView 53 or Programming-By-Example. 58

HyperCard

While spreadsheets were designed to solve problems in a specific application area, HyperCard 63 was designed around a particular application format. Programs are "stacks of cards" containing multimedia components and controls such as buttons. These controls can be programmed with pre-defined operations like "navigate to another card", or via the HyperTalk scripting language for anything more sophisticated.

As a programming system, HyperCard is interesting for a couple of reasons. It effectively combines visual and textual notation. Programs appear the same way during editing as they do during execution. Most notably, HyperCard supports gradual progression from the "user" role to "developer": a user may first use stacks, then go on to edit the visual aspects or choose pre-defined logic until, eventually, they learn to program in HyperTalk.

Evaluation

The technical dimensions should be evaluated on the basis of how useful they are for designing and analysing programming systems. To that end, this section demonstrates two uses of the framework. First, we use the dimensions to analyze the recent programming system Dark, 102 explaining how it relates to past work and how it contributes to the state of the art. Second, we use technical dimensions to identify a new unexplored point in the design space of programming systems and envision a new design that could emerge from the analysis.

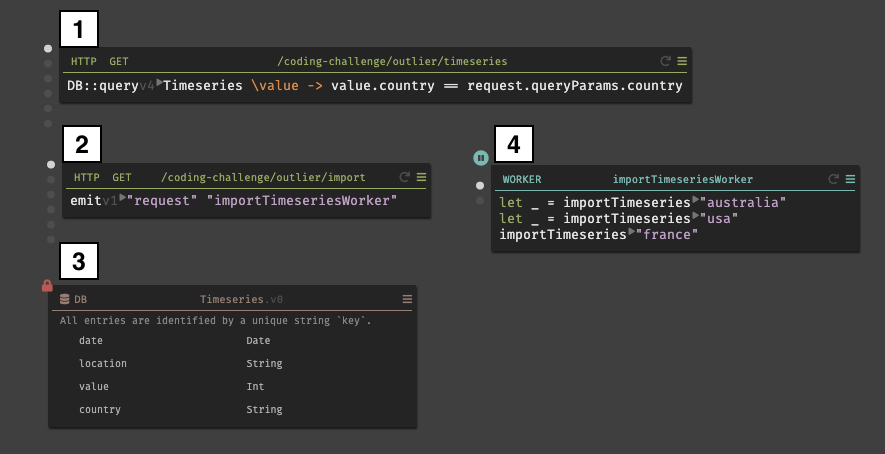

A screenshot of the Dark programming system. The screenshot shows a simple web service in Dark consisting of two HTTP endpoints (1, 2), a database (3), and a worker (4) (image source)

Evaluating the Dark programming system

Dark is a programming system for building "serverless backends", i.e. services that are used by web and mobile applications. It aims to make building such services easier by "removing accidental complexity" (see Goals of Dark v2) resulting from the large number of systems typically involved in their deployment and operation. This includes infrastructure for orchestration, scaling, logging, monitoring and versioning. Dark provides integrated tooling for development and is described as deployless, meaning that deploying code to production is instantaneous.

Dark illustrates the need for the broader perspective of programming systems. Of course, it contains a programming language, which is inspired by OCaml and F#. But Dark's distinguishing feature is that it eliminates the many secondary systems needed for deployment of modern cloud-based services. Those exist outside of a typical programming language, yet form a major part of the complexity of the overall development process.

With technical dimensions, we can go beyond the "sales pitch", look behind the scenes, and better understand the interesting technical aspects of Dark as a programming system. The table below summarises the more detailed analysis that follows. Two clear benefits of such an analysis are:

- It provides a list of topics to investigate when examining a programming system such as Dark.

- It give us a common vocabulary for these topics that can be used to compare Dark with other systems on the same terms.

Positioning Dark in the design space

The following table provides a concise summary of where Dark lies on its distinguishing dimensions. For brevity, dimensions where Dark does not differ from ordinary programming are omitted. A more detailed discussion can be found in the Dimensional analysis of Dark.

| Interaction | |

| Modes of interaction | Single integrated mode comprises development, debugging and operation ("deployless")) |

| Feedback loops | Code editing is triggered either by user or by unsupported HTTP request and changes are deployed automatically, allowing for immediate feedback |

| Errors | |

| Error response | When an unsupported HTTP request is received, programmer can write handler code using data from the request in the process) |

| Conceptual structure | |

| Conceptual integrity versus openness | Abstractions at the domain specific high-level and the functional low-level are both carefully designed for conceptual integrity. |

| Composability | User applications are composed from high-level primitives; the low-level uses composable functional abstractions (records, pipelines).) |

| Convenience | Powerful high-level domain-specific abstractions are provided (HTTP, database, workers); core functional libraries exist for the low-level.) |

| Adoptability | |

| Learnability | High-level concepts will be immediately familiar to the target audience; low-level language has the usual learning curve of basic functional programming) |

| Notation | |

| Notational structure | Graphical notation for high-level concepts is complemented by structure editor for low-level code) |

| Uniformity of notations | Common notational structures are used for database and code, enabling the same editing construct for sequential structures (records, pipelines, tables)) |

| Complexity | |

| Factoring of complexity | Cloud infrastructure (deployment, orchestration, etc.) is provided by the Dark platform that is invisible to the programmer, but also cannot be modified) |

| Level of automation | Current implementation provides basic infrastructure, but a higher degree of automation in the platform can be provided in the future, e.g. for scalability) |

| Customizability | |

| Staging of customization | System can be modified while running and changes are persisted, but they have to be made in the Dark editor, which is distinct from the running service) |

Technical innovations of Dark

This analysis reveals a number of interesting aspects of the Dark programming system. The first is the tight integration of different modes of interaction which collapses a heterogeneous stack of technologies, makes Dark learnable, and allows quick feedback from deployed services. The second is the use of error response to guide the development of HTTP handlers. Thanks to the technical dimensions framework, each of these can be more precisely described. It is also possible to see how they may be supported in other programming systems. The framework also points to possible alternatives (and perhaps improvements) such as building a more self-sustainable system that has similar characteristics to Dark, but allows greater flexibility in modifying the platform from within itself.

Dimensional analysis of Dark

Modes of interaction and feedback loops

Conventional modes of interaction include running, editing and debugging. For modern web services, running refers to operation in a cloud-based environment that typically comes with further kinds of feedback (logging and monitoring). The key design decision of Dark is to integrate all these different modes of interaction into a single one. This tight integration allows Dark to provide a more immediate feedback loop where code changes become immediately available not just to the developer, but also to external users. The integrated mode of interaction is reminiscent of the image-based environment in Smalltalk; Dark advances the state of art by using this model in a multi-user, cloud-based context.

Feedback loops and error response

The integration of development and operation also makes it possible to use errors occurring during operation to drive development. Specifically, when a Dark service receives a request that is not supported, the user can build a handler 13 to provide a response—taking advantage of the live data that was sent as part of the request. In terms of our dimensions, this is a kind of error response that was pioneered by the PILOT system for Lisp. 90 Dark does this not just to respond to errors, but also as the primary development mechanism, which we might call Error-Driven Development. This way, Dark users can construct programs with respect to sample input values.

Conceptual structure and learnability

Dark programs are expressed using high-level concepts that are specific to the domain of server-side web programming: HTTP request handlers, databases, workers and scheduled jobs. These are designed to reduce accidental complexity and aim for high conceptual integrity. At the level of code, Dark uses a general-purpose functional language that emphasizes certain concepts, especially records and pipelines. The high-level concepts contribute to learnability of the system, because they are highly domain-specific and will already be familiar to its intended users.

Notational structure and uniformity

Dark uses a combination of graphical editor and code. The two aspects of the notation follow the complementing notations pattern. The windowed interface is used to work with the high-level concepts and code is used for working with low-level concepts. At the high level, code is structured in freely positionable boxes on a 2D surface. Unlike Boxer, 99 these boxes do not nest and the space cannot be used for other content (say, for comments, architectural illustrations or other media). Code at the low level is manipulated using a syntax-aware structure editor, showing inferred types and computed live values for pure functions. It also provides special editing support for records and pipelines, allowing users to add fields and steps respectively.

Factoring of complexity and automation

One of the advertised goals of Dark is to remove accidental complexity. This is achieved by collapsing the heterogeneous stack of technologies that are typically required for development, cloud deployment, orchestration and operation. Dark hides this via factoring of complexity. The advanced infrastructure is provided by the Dark platform and is hidden from the user. The infrastructure is programmed explicitly and there is no need for sophisticated automation. This factoring of functionality that was previously coded manually follows a similar pattern as the development of garbage collection in high-level programming languages.

Customizability

The Dark platform makes a clear distinction between the platform itself and the user application, so self-sustainability is not an objective. The strict division between the platform and user (related to its aforementioned factoring of complexity) means that changes to Dark require modifying the platform source code itself, which is available under a license that solely allows using it for the purpose of contributing. Similarly, applications themselves are developed by modifying and adding code, requiring destructive access to it—so additive authoring is not exhibited at either level. Thanks to the integration of execution and development, persistent changes may be made during execution (c.f. staging of customization but this is done through the Dark editor, which is separate from the running service.

Exploring the design space

With a little work, technical dimensions can let us see patterns or gaps in the design space by plotting their values on a simple scatterplot. Here, we will look at two dimensions, notational diversity (this is simply uniformity of notations, but flipped in the opposite direction) and self-sustainability, for the following programming systems: Haskell, Jupyter notebooks, Boxer, HyperCard, the Web, spreadsheets, Lisp, Smalltalk, UNIX, and COLAs. 77

While our choice to describe dimensions as qualitative concepts was necessary for coming up with them, some way of generating numbers is clearly necessary for visualizing their relationships like this. For simplicity, we adopt the following scheme. For each dimension, we distill the main idea into several yes/no questions (as discussed in the appendix) that capture enough of the distinctions we observe between the systems we wish to plot. Then, for each system, we add up the number of "yes" answers and obtain a plausible score for the dimension.

The diagram (click for a bigger version) shows the results we obtained with our sets of questions. It shows that application-focused systems span a range of notational diversity, but only within fairly low self-sustainability. The OS-likes cluster in an "island" at the right, sharing identical notational diversity and near-identical self-sustainability.

There is also a conspicuous blank space at the top-right, representing an unexplored combination of high values on both dimensions. With other pairs of dimensions, we might take this as evidence of an oppositional relationship, such that more of one inherently means less of the other (perhaps looking for a single new dimension that describes this better.) In this case, though, there is no obvious conflict between having many notations and being able to change a system from within. Therefore, we interpret the gap as a new opportunity to try out: combine the self-sustainability of COLAs with the notational diversity of Boxer and Web development. In fact, this is more or less the forthcoming dissertation of the primary author.

Conclusions

There is a renewed interest in developing new programming systems. Such systems go beyond the simple model of code written in a programming language using a more or less sophisticated text editor. They combine textual and visual notations, create programs through rich graphical interactions, and challenge accepted assumptions about program editing, execution and debugging. Despite the growing number of novel programming systems, it remains difficult to evaluate the design of programming systems and see how they improve over work done in the past. To address the issue, we proposed a framework of “technical dimensions” that captures essential characteristics of programming systems in a qualitative but rigorous way.

The framework of technical dimensions puts the vast variety of programming systems, past and present, on a common footing of commensurability. This is crucial to enable the strengths of each to be identified and, if possible, combined by designers of the next generation of programming systems. As more and more systems are assessed in the framework, a picture of the space of possibilities will gradually emerge. Some regions will be conspicuously empty, indicating unrealized possibilities that could be worth trying. In this way, a domain of "normal science" is created for the design of programming systems.

Acknowledgments

We particularly thank Richard Gabriel for shepherding our submission to the 2021 Pattern Languages of Programming (PLoP) conference. We thank the participants of the PLoP Writers' Workshop for their feedback, as well as others who have proofread or otherwise given input on the ideas at different stages. These include Luke Church, Filipe Correia, Thomas Green, Brian Hempel, Clemens Klokmose, Geoffery Litt, Mariana Mărășoiu, Stefan Marr, Michael Weiss, and Rebecca and Allen Wirfs-Brock. We also thank the attendees of our Programming 2021 Conversation Starters session and our Programming 2022 tutorial/workshop entitled "Methodology Of Programming Systems" (MOPS).

This work was partially supported by the project of Czech Science Foundation no. 23-06506S.

Making dimensions quantitative

To generate numerical co-ordinates for self-sustainability and notational diversity, we split both dimensions into a small number of yes/no questions and counted the "yes" answers for each system. We came up with the questions informally, with the goal of achieving three things:

- To capture the basic ideas or features of the dimension

- To make prior impressions more precise (i.e. to roughly match where we intuitively felt certain key systems fit, but provide precision and possible surprises for systems we were not as confident about.)

- To be the fewest in number necessary to attain the above

The following sections discuss the questions, along with a brief rationale for each question. This also raises a number of points for future work that we discuss below.

Quantifying self-sustainability

Questions

- Can you add new items to system namespaces without a restart? The canonical example of this is in JavaScript, where "built-in" classes like

ArrayorObjectcan be augmented at will (and destructively modified, but that would be a separate point). Concretely, if a user wishes to make a newsumoperation available to all Arrays, they are not prevented from straightforwardly adding the method to the Array prototype as if it were just an ordinary object (which it is). Having to re-compile or even restart the system would mean that this cannot be meaningfully achieved from within the system. Conversely, being able to do this means that even "built-in" namespaces are modifiable by ordinary programs, which indicates less of a implementation level vs. user level divide and seems important for self-sustainability. - Can programs generate programs and execute them? This property, related to "code as data" or the presence of an

eval()function, is a key requirement of self-sustainability. Otherwise, re-programming the system, beyond selecting from a predefined list of behaviors, will require editing an external representation and restarting it. If users can type text inside the system then they will be able to write code---yet this code will be inert unless the system can interpret internal data structures as programs and actually execute them. - Are changes persistent enough to encourage indefinite evolution? If initial tinkering or later progress can be reset by accidentally closing a window, or preserved only through a convoluted process, then this discourages any long-term improvement of a system from within. For example, when developing a JavaScript application with web browser developer tools, it is possible to run arbitrary JavaScript in the console, yet these changes apply only to the running instance. After tinkering in the console with the advantage of concrete system state, one must still go back to the source code file and make the corresponding changes manually. When the page is refreshed to load the updated code, it starts from a fresh initial state. This means it is not worth using the running system for any programming beyond tinkering.

- Can you reprogram low-level infrastructure within the running system? This is a hopefully faithful summary of how the COLAs work aims to go beyond Lisp and Smalltalk in this dimension.

- Can the user interface be arbitrarily changed from within the system? Whether classed as "low-level infrastructure" or not, the visual and interactive aspects of a system are a significant part of it. As such, they need to be as open to re-programming as any other part of it to classify as truly self-sustainable.

System evaluation

| Question | Haskell | Jupyter | HyperCard | Subtext | Spreadsheets | Boxer | Web | UNIX | Smalltalk | Lisp | COLAs |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | |||||||||||

| 2 | |||||||||||

| 3 | |||||||||||

| 4 | |||||||||||

| 5 | |||||||||||

| Total | 0 | 0 | 1 | 0 | 1 | 1 | 2 | 4 | 4 | 4 | 5 |

Quantifying notational diversity

Questions

- Are there multiple syntaxes for textual notation? Obviously, having more than one textual notation should count for notational diversity. However, for this dimension we want to take into account notations beyond the strictly textual, so we do not want this to be the only relevant question. Ideally, things should be weighted so that having a wide diversity of notations within some narrow class is not mistaken for notational diversity in a more global sense. We want to reflect that UNIX, with its vast array of different languages for different situations, can never be as notationally diverse as a system with many languages and many graphical notations, for example.

- Does the system make use of GUI elements? This is a focused class of non-textual notations that many of our example systems exhibit.

- Is it possible to view and edit data as tree structures? Tree structures are common in programming, but they are usually worked with as text in some way. A few of our examples provide a graphical notation for this common data structure, so this is one way they can be differentiated from the rest.

- Does the system allow freeform arrangement and sizing of data items? We still felt Boxer and spreadsheets exhibited something not covered by the previous three questions, which is this. Within their respective constraints of rendering trees as nested boxes and single-level grids, they both provide for notational variation that can be useful to the user's context. These systems could have decided to keep boxes neatly placed or cells all the same size, but the fact that they allow these to vary scores an additional point for notational diversity.

System evaluation

| Question | Haskell | Jupyter | HyperCard | Subtext | Spreadsheets | Boxer | Web | UNIX | Smalltalk | Lisp | COLAs |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | |||||||||||

| 2 | |||||||||||

| 3 | |||||||||||

| 4 | |||||||||||

| Total | 0 | 1 | 1 | 2 | 2 | 3 | 3 | 1 | 1 | 1 | 1 |

Remarks and future work

This task of quantifying dimensions forced us to drill down and decide on more crisp definitions of what they should be. We recommend it as a useful exercise even in the absence of a goal like generating a graph.

It is worth clarifying the meaning of what we have done here. It must not be overlooked that this settling down on one particular definition does not replace or obsolete the general qualitative descriptions of the dimensions that we start with. Clearly, there are far too many sources of variation in our process to consider our results here as final, objective, the single correct definition of these dimensions, or anything in this vein. Each of these sources of variation suggests future work for interested parties:

Quantification goals

We sought numbers to generate a graph that roughly matched our own intuitive placement of several example systems. In other words, we were trying to make those intuitions more precise along with the dimensions themselves. An entirely different approach would be to have no "anchor" at all, and to take whatever answers a given definition produces as ground truth. However, this would demand more detail for answering questions and generating them in the first place.

Question generation

We generated our questions informally and stopped when it seemed like there were enough to make the important distinctions between example points. There is huge room for variation here, though it seems particularly hard to generate questions in any rigorous manner. Perhaps we could take our self-sustainability questions to be drawn from a large set of "actions you can perform while the system is running", which could be parametrized more easily. Similarly, our notational diversity questions tried to take into account a few classes of notations—a more sophisticated approach might be to just count the notations in a wide range of classes.

Answering the questions

We answered our questions by coming to a consensus on what made sense to the three of us. Others may disagree with these answers, and tracing the source of disagreement could yield insights for different questions that both parties would answer identically. Useful information could also be obtained from getting many different people to answer the questions and seeing how much variation there is.

What is "Lisp", anyway?

The final major source of variation would be the labels we have assigned to example points. In some cases (Boxer), there really is only one system; in others (spreadsheets) there are several different products with different names, yet which are still similar enough to plausibly analyze as the same thing; in still others (Lisp) we're treating a family of related systems as a cohesive point in the design space. It is understandable if some think this elides too many important distinctions. In this case, they could propose splits into different systems or sub-families, or even suggest how these families should be treated as blobs within various sub-spaces.

Good old programming systems

A programming system is an integrated set of tools sufficient for creating, modifying, and executing programs. The classic kind of programming system consists of a programming language with a text editor and a compiler, but programming systems can also be built around interactive graphical user interfaces, provide various degree of integration between the runtime execution environment and leverage graphical notations. Our notion accomodates examples from three broad classes of systems.

-

Systems based around languages. Software ecosystems built around a text-based programming language. They consist of a set of tools such as compilers, debuggers, and profilers, accessible either through a command-line or a development environment. Read more in the paper...

-

OS-like programming systems. Those that resemble an operating system in that they control both the execution environment and the resources of an entire machine. They provide a common interface for communication between programs and with the user. Read more in the paper...

-

Application-focused systems. Programmable applications, typically optimized for a specific domain, offering a limited degree of programmability, which may be increased with newer versions. Read more in the paper...

This page provides a brief overview of nine "Good Old Programming Systems" that feature as examples in our discussion. For each of the system, you can look at its characteristics, also available in the comparison matrix and references sections of the catalogue of technical dimensions where the system is discussed.

Adoptability Description and relations...

How does the system facilitate or obstruct adoption by both individuals and communities?

Dimensions

Adoptability

How does the system facilitate or obstruct adoption by both individuals and communities?

We consider adoption by individuals as the dimension of Learnability, and adoption by communities as the dimension of Sociability. For more information, refer to the two primary dimensions of this cluster.

Dimension: Learnability